PhD Student, Research Assistant in Explainable AI

Freie Universität Berlin · Berlin, Germany

2022 – Present

I am currently a PhD candidate in the Cybersecurity and AI working group at Freie Universität Berlin under the supervision of Prof. Gerhard Wunder. My research centers on responsible AI, with a particular emphasis on explainability, fairness, and robustness of machine learning and AI systems.

Building on several published works in feature attribution, I am now seeking to extend my research to broader forms of explanation, including higher-order feature interactions and data attribution, and to investigate their practical utility in model training and debugging.

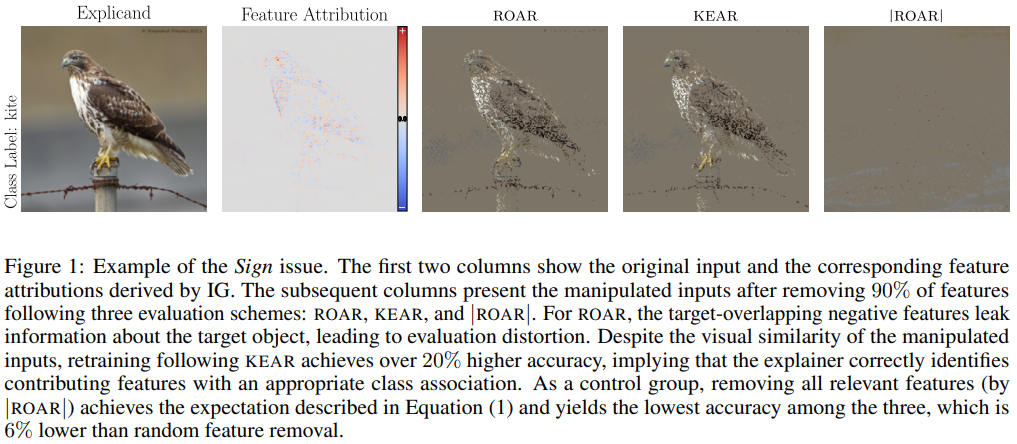

Revisits the widely used retraining-based evaluation of feature attribution, demonstrates its pitfalls, and proposes efficient, distortion-free alternatives for assessing explanation quality.

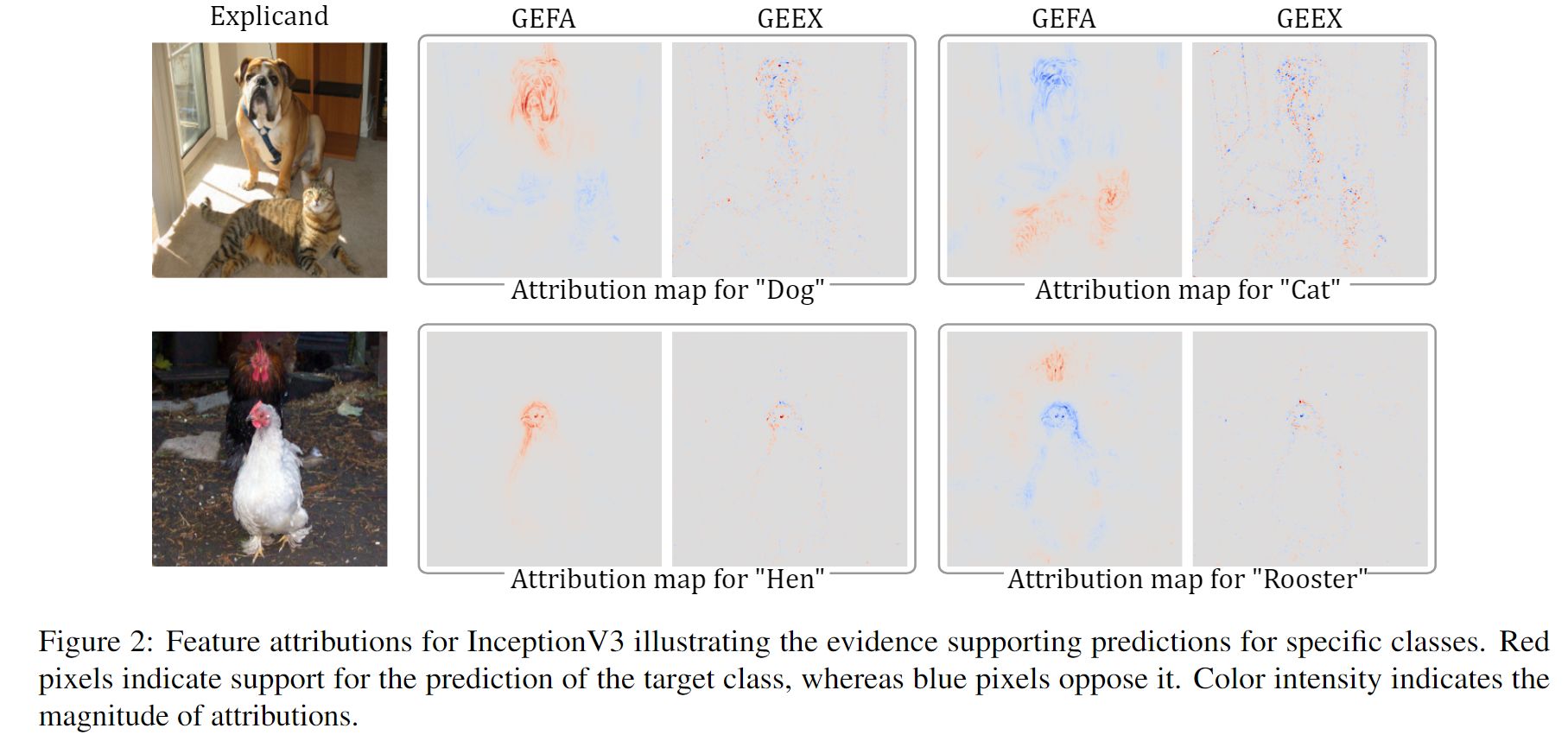

Derives a feature attribution framework based on proxy gradient estimation. The proposed method provides an unbiased estimate of Shapley values and is generally applicable to different model architectures and data modalities.

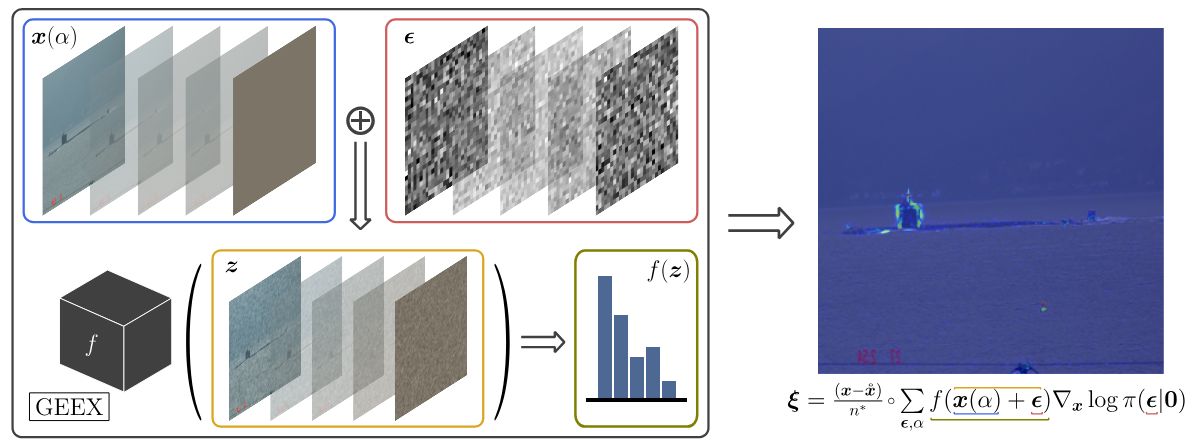

Explores the possibility of delivering gradient-like attributions with only query-level access. The resulting method preserves a set of desirable axioms of white-box approaches and demonstrates competitive performance in empirical experiments.

AIgenCY aims to conduct fundamental research on existing and emerging threats to and through generative AI, particularly large language models and foundation models. At the same time, it aims to develop suitable measures to improve the detection of and defence against such cyber attacks.

The independent think tank iRights.Lab founded the centre in partnership with the institutes Fraunhofer AISEC and IAIS as well as the Freie Universität Berlin. As a forum for debate in Germany, the CTAI makes the developments surrounding societal questions about artificial intelligence and algorithmic systems tangible.

Artificial intelligence (AI) technologies are the driving force behind digitization. Due to their enormous social relevance, a responsible use of AI is of particular importance. The research and application of responsible AI is a very young discipline and requires the bundling of research activities from different disciplines in order to design and apply AI systems in a reliable, transparent, secure and legally acceptable way.

For economic and safety reasons, demand for automated damage detection in civil infrastructure is high. This collaborative research project aims to contribute to the further development of automated damage detection in wind energy turbines based on acoustic emission testing (AET) and machine learning.